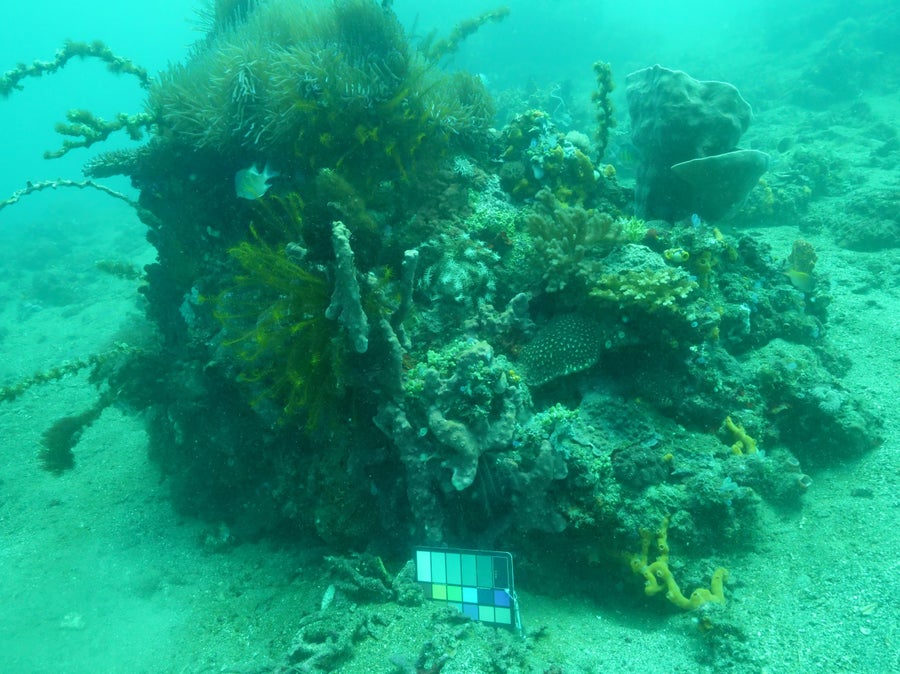

Coral reefs are among nature's most complex and colorful living formations. But as any underwater photographer knows, pictures of them taken without artificial lights often come out bland and blue. Even shallow water selectively absorbs and scatters light at different wavelengths, making certain features hard to see and washing out colors—especially reds and yellows. This effect makes it difficult for coral scientists to use computer vision and machine-learning algorithms to identify, count and classify species in underwater images; they have to rely on time-consuming human evaluation instead.

But a new algorithm called Sea-thru, developed by engineer and oceanographer Derya Akkaynak, removes from an image the visual distortion caused by water. The effects could be far-reaching for biologists who need to see true colors underneath the surface. Akkaynak and engineer Tali Treibitz, her postdoctoral adviser at the University of Haifa in Israel, detailed the process in a paper presented in June at the IEEE Conference on Computer Vision and Pattern Recognition.

The large coral formation as originally photographed, before processing with Sea-thru. Credit: Derya Akkaynak

Sea-thru's image analysis accounts for how the physics of light absorption and scattering differs between the atmosphere and the ocean, where the particles that light interacts with are much larger. The program then effectively reverses the water's distortion pixel by pixel, restoring lost colors.
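To make the idea of reversing the distortion more concrete, here is a minimal sketch built on a simplified underwater image-formation model. In this model, each color channel of a pixel is the object's true color dimmed exponentially with distance, plus "backscatter" light added by the water column, with separate coefficients for the two effects. It assumes the coefficients and the camera-to-object range are already known and constant per channel. The function names (`degrade`, `restore`, `restore_pixel`) and every numeric value are hypothetical illustrations, not Sea-thru's code; the real method has to estimate these quantities from the images themselves and models them in more detail.

```python
import math

def backscatter(z, beta_b, b_inf):
    """Veiling light added by the water between the camera and an object at range z (meters)."""
    return b_inf * (1.0 - math.exp(-beta_b * z))

def degrade(j, z, beta_d, beta_b, b_inf):
    """Forward model for one channel: true value j, attenuated with range, plus backscatter."""
    return j * math.exp(-beta_d * z) + backscatter(z, beta_b, b_inf)

def restore(i, z, beta_d, beta_b, b_inf):
    """Invert the forward model for one channel: subtract backscatter, then undo attenuation."""
    direct = max(i - backscatter(z, beta_b, b_inf), 0.0)  # clamp negatives caused by noise
    return direct * math.exp(beta_d * z)

def restore_pixel(rgb, z, coeffs):
    """Restore each channel independently; coeffs holds (beta_d, beta_b, b_inf) per channel."""
    return tuple(restore(i, z, *c) for i, c in zip(rgb, coeffs))

# Illustrative, not measured, coefficients: red is absorbed far faster than blue.
COEFFS = [(0.60, 0.50, 0.10),   # red
          (0.10, 0.08, 0.30),   # green
          (0.05, 0.04, 0.45)]   # blue

true_rgb = (0.8, 0.4, 0.2)   # a hypothetical reddish coral
z = 3.0                      # hypothetical camera-to-object range in meters

observed = tuple(degrade(j, z, *c) for j, c in zip(true_rgb, COEFFS))
recovered = restore_pixel(observed, z, COEFFS)
print("observed: ", observed)
print("recovered:", recovered)
```

Because `restore` needs `z`, the same observed color maps back to different true colors at different ranges, which is why a correction of this kind depends on knowing how far away each part of the scene is.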

One caveat is that the process requires distance information to work. Akkaynak takes numerous photographs of the same scene from various angles, which Sea-thru uses to estimate the distance between the camera and objects in the scene—and, in turn, the water's light-attenuating impact. Luckily, many scientists already capture distance information in image data sets by using a process called photogrammetry, and Akkaynak says the program will readily work on those photographs.
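Photogrammetry recovers distance by triangulating the same point as seen from different camera positions. The simplest case, two rectified views taken a known baseline apart, gives range from the pixel shift (disparity) between views as z = f·B/d. The sketch below assumes that two-view case, whereas real photogrammetry pipelines solve a more general multi-view problem, and then shows how much of each color channel survives over the estimated range. The function names, camera parameters and attenuation coefficients are all hypothetical, chosen only for illustration.

```python
import math

def range_from_disparity(focal_px, baseline_m, disparity_px):
    """Rectified two-view stereo: z = f * B / d (f and d in pixels, B in meters)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means infinite range")
    return focal_px * baseline_m / disparity_px

def transmission(z, beta_d):
    """Fraction of an object's reflected light in one channel that survives z meters of water."""
    return math.exp(-beta_d * z)

# Hypothetical per-meter attenuation coefficients, for illustration only.
BETA_D = {"red": 0.60, "green": 0.10, "blue": 0.05}

z = range_from_disparity(focal_px=1000.0, baseline_m=0.2, disparity_px=50.0)
for channel, beta in BETA_D.items():
    print(f"{channel}: {transmission(z, beta):.1%} of the light remains at {z:.1f} m")
```

With these made-up numbers, most of the red light is gone after a few meters while most of the blue survives, the imbalance that gives unlit reef photographs their blue cast.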

“There are a lot of challenges associated with working underwater that put us well behind what researchers can do above water and on land,” says Nicole Pedersen, a researcher on the 100 Island Challenge, a project at the University of California, San Diego, in which scientists take up to 7,000 pictures per 100 square meters to assemble 3-D models of reefs. Progress has been hindered by a lack of computer tools for processing these images, Pedersen says, adding that Sea-thru is a step in the right direction.

Credit: Derya Akkaynak

The algorithm differs from applications such as Photoshop, with which users can artificially enhance underwater images by uniformly pumping up reds or yellows. “What I like about this approach is that it's really about obtaining true colors,” says Pim Bongaerts, a coral biologist at the California Academy of Sciences. “Getting true color could really help us get a lot more worth out of our current data sets.”
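The difference from a uniform boost can be illustrated numerically. Because attenuation grows exponentially with distance, a single red gain tuned to make one object look right will under-correct objects farther away, while a range-aware correction recovers each one. This is a hypothetical sketch that ignores backscatter for brevity; the coefficient and color values are illustrative, not measured, and it does not describe how any particular photo editor works internally.

```python
import math

BETA_RED = 0.60   # hypothetical red attenuation coefficient, per meter
TRUE_RED = 0.8    # two corals with the same true red value, at different ranges

def observed_red(z):
    """Red value reaching the camera from an object at range z (backscatter ignored)."""
    return TRUE_RED * math.exp(-BETA_RED * z)

# One global gain, chosen so that an object 2 m away looks correct.
uniform_gain = math.exp(BETA_RED * 2.0)

results = {}
for z in (2.0, 6.0):
    obs = observed_red(z)
    uniform = obs * uniform_gain                # same boost for every pixel
    range_aware = obs * math.exp(BETA_RED * z)  # boost matched to each pixel's range
    results[z] = (uniform, range_aware)
    print(f"z = {z} m: uniform {uniform:.3f}, range-aware {range_aware:.3f}, true {TRUE_RED}")
```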